🐦 RWKV v6 Finch 14B is here!

The RWKV v6 models, at 14B, 7B, 3B, and 1.6B

Announcing the latest RWKV model: Finch 14B!

Finch is the 6th and latest version of the RWKV architecture, succeeding the Eagle (v5) line of models. Finch improves upon Eagle by making the token shift and time-mixing data-dependent. This makes Finch more efficient at managing its “long-term memory” as it processes a prompt, which gives it better range.
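To make "data-dependence" concrete, here is a minimal sketch of a fixed token shift next to a data-dependent one, loosely following the description in the Eagle and Finch paper. The function names, the tiny list-based matrices, and the parameter names (`mu_x`, `lam`, `A`, `B`) are illustrative assumptions, and this pure-Python version is not the actual RWKV implementation.

```python
import math

def static_lerp(x_t, x_prev, mu):
    # Fixed token shift: blend the current and previous token per channel
    # with a learned constant mu that is the same for every input.
    return [a + (b - a) * m for a, b, m in zip(x_t, x_prev, mu)]

def matvec(x, W):
    # x has length n, W has n rows and m columns; returns a length-m list.
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

def data_dependent_lerp(x_t, x_prev, mu_x, lam, A, B):
    # Data-dependent token shift (sketch): the blend weight is computed
    # from the tokens themselves through a small low-rank projection.
    base = static_lerp(x_t, x_prev, mu_x)
    hidden = [math.tanh(h) for h in matvec(base, A)]
    mix = [l + d for l, d in zip(lam, matvec(hidden, B))]
    return [a + (b - a) * m for a, b, m in zip(x_t, x_prev, mix)]
```

With `A` and `B` set to zero the blend weight collapses to the constant `lam`, which recovers the fixed behaviour; with non-zero `A` and `B` the weight changes with the input.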

The Finch architecture is covered in detail, alongside Eagle, in https://arxiv.org/pdf/2404.05892. Finch models smaller than 14B have been appearing throughout the 2024 calendar year. The 14B is the largest Finch trained to date, and also the largest RWKV model (the largest Eagle trained was 7B).

Training details and Evals

Both Finch 7B and Finch 14B are derived by continuing training from the Eagle 7B weights on the same dataset (known as World v2.1, the constituents of which are described here). The 14B model is derived from stacking two copies of the 7B model. Stacking effectively increases the short-term memory of the model (i.e. how much of the exact prompt feeds into the NN layers at each level), which has a different effect than widening the model.
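As a rough illustration of what "stacking two copies" could look like at the checkpoint level, here is a minimal sketch that duplicates the per-layer weights of a model so that the result has twice the depth. It assumes layer weights are stored under keys of the form `blocks.<index>.<name>` and that the second copy is placed directly after the first; the post does not describe the actual procedure, so `stack_blocks` and the toy keys below are hypothetical.

```python
import re

def stack_blocks(state_dict, n_layers, copies=2):
    # Sketch under assumptions: per-layer weights are keyed "blocks.<i>.<name>".
    # Layer i of copy c is written to index i + c * n_layers.
    pattern = re.compile(r"^blocks\.(\d+)\.(.+)$")
    stacked = {}
    for key, value in state_dict.items():
        match = pattern.match(key)
        if match is None:
            # Non-layer weights (e.g. embeddings, output head) are kept once.
            stacked[key] = value
            continue
        layer, rest = int(match.group(1)), match.group(2)
        for c in range(copies):
            # Real tensors would need to be copied here, not shared.
            stacked[f"blocks.{layer + c * n_layers}.{rest}"] = value
    return stacked

# Toy checkpoint with strings standing in for tensors.
toy = {
    "emb.weight": "E",
    "blocks.0.att.w": "a0",
    "blocks.1.att.w": "a1",
    "head.weight": "H",
}
deeper = stack_blocks(toy, n_layers=2)
```

The stacked model keeps the same width per layer and only gains depth, which is the distinction from widening that the paragraph above draws.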

We evaluated the Finch models using https://github.com/RWKV/lm-evaluation-harness, a fork of the standard LLM evaluation framework, which also powers HuggingFace’s Open LLM Leaderboard. The fork exists only to make the harness work via automation.

We ran a wide variety of benchmarks (235 in total), attempting to maximize breadth while managing computation time: each of the models took 2 days to evaluate in our setup!

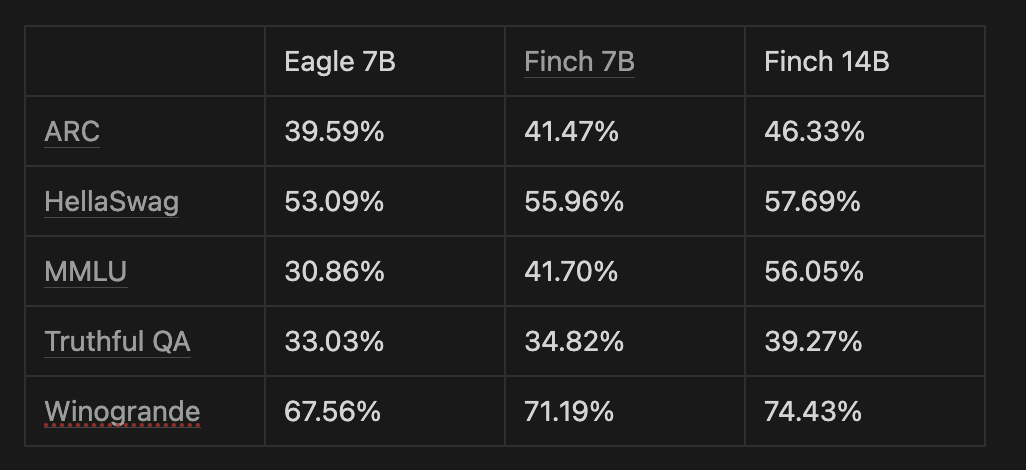

Finch 7B improved +5.38% across all benchmarks, while Finch 14B improved an additional +7.14% across all benchmarks (both figures relative to Eagle 7B). Given that Eagle 7B was the starting point of training for both models, some increase is a given. The size of the increase is evidence of the value of Finch’s architectural changes, and also that the depth of the model is not saturated by our dataset run (of 1.42T tokens).
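For readers who want to reproduce this kind of aggregate figure from their own harness output, here is a minimal sketch of one way to compare two models across a shared set of benchmarks. It assumes each model's results are a mapping from task name to a score in the range 0 to 1 and reports the change in the mean score in percentage points; the post does not state exactly how its figures were aggregated, and the task names and scores below are placeholders, not real results.

```python
def improvement_points(baseline, candidate):
    # Compare only the tasks that both models were evaluated on.
    shared = sorted(set(baseline) & set(candidate))
    if not shared:
        raise ValueError("no benchmarks in common")
    base_mean = sum(baseline[t] for t in shared) / len(shared)
    cand_mean = sum(candidate[t] for t in shared) / len(shared)
    # Difference of mean scores, expressed in percentage points.
    return 100.0 * (cand_mean - base_mean)

# Placeholder scores for illustration only.
model_a = {"task_a": 0.50, "task_b": 0.60}
model_b = {"task_a": 0.55, "task_b": 0.65, "task_c": 0.70}
delta = improvement_points(model_a, model_b)
```

Restricting the comparison to shared tasks avoids a model looking better or worse simply because it was run on a different subset of benchmarks.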

If we focus specifically on the Open LLM Leaderboard v1 benchmarks, we see

Contributing GPU cluster time to RWKV!

RWKV is an open source project recognized by the Linux Foundation. The project has various bottlenecks, and GPU time is one of them, so we gratefully accept donations. If your organization has idle GPU time, please reach out at eugene@rwkv.com or nathan@rwkv.com to explore a donation and learn about what kinds of training runs those spare cycles could power.

References

Model weights:

Hosted inference: https://featherless.ai/models/RWKV/Finch-14B

Training code: https://github.com/BlinkDL/RWKV-LM