RWKV-6 Finch 7B World 3 now with 3.1T tokens trained!

Moar training, moar capable!

RWKV-6 model: Finch 7B World 3

Now trained with an expanded and improved multilingual dataset, the latest Finch World 3 is the most capable 7B parameter class RWKV model yet! You can use it today from either HuggingFace or the ChatRWKV inference runtime.

Our goal is always to provide high-quality open-source AI models for everyone worldwide, regardless of nationality, language, or economic status. The RWKV architecture is designed to help reduce our impact on the environment, using a fixed amount of power per token regardless of context length. We invite interested developers to help us shape its future on the RWKV Discord server.

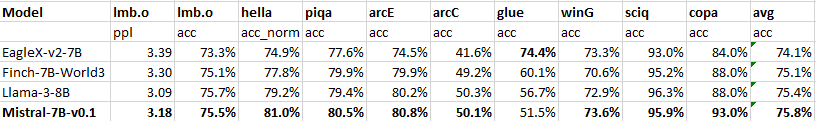

Eval and benchmark

We tested Finch 7B World 3 using the EleutherAI lm-evaluation-harness across various typical industry benchmarks. Downstream performance improved significantly, now strongly beating Llama2 7B (trained on 2 trillion tokens) and closing in on Mistral 7B v0.1 and even Llama3 8B. We had theorized that the total number of training tokens was the major difference between RWKV-6 models and modern Transformers, and the continued improvement from further training reinforces that view. Llama3 8B is a larger model trained on 15 trillion tokens - nearly five times as many as Finch World 3 - and yet the scores are close!
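To make the "nearly five times" comparison concrete, here is a minimal sketch that computes the token-budget ratios from the figures quoted above. The dictionary and the `token_ratio` helper are illustrative names, not part of any RWKV tooling, and Mistral 7B v0.1 is left out because this post does not state its training-token count.

```python
# Training-token budgets as quoted in this post, in trillions of tokens.
TOKENS_TRILLIONS = {
    "Finch 7B World 3": 3.1,
    "Llama2 7B": 2.0,
    "Llama3 8B": 15.0,
}


def token_ratio(model: str, baseline: str = "Finch 7B World 3") -> float:
    """Return how many times more tokens `model` saw than `baseline`."""
    return TOKENS_TRILLIONS[model] / TOKENS_TRILLIONS[baseline]


if __name__ == "__main__":
    for name in TOKENS_TRILLIONS:
        print(f"{name}: {token_ratio(name):.2f}x the tokens of Finch 7B World 3")
```

A ratio below 1 means the model saw fewer tokens than Finch 7B World 3, as is the case for Llama2 7B.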

We’re looking forward to sharing the upcoming results from our new RWKV-7 architecture “Goose”, which may finally match or eclipse modern Transformers on a tokens-trained basis.

You can find the Finch architecture details in the Eagle and Finch research paper, recently presented at the Conference on Language Modeling.

Finch 7B World 3 has now been trained on a total of 3.1 trillion multilingual tokens. The training was accomplished in two steps: First, the original 1.1 trillion token Eagle (RWKV-5) checkpoint was upgraded to Finch (RWKV-6) and trained up to 1.4 trillion tokens with an expanded World v2.1 dataset. Then, the dataset was expanded again and training was continued for up to a total of 3.1 trillion tokens.
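The cumulative totals above imply how many tokens each training step contributed. A small sketch of that arithmetic follows; the stage labels and the `tokens_added_per_stage` helper are our own shorthand for illustration, while the cumulative figures are the ones stated in the paragraph.

```python
# Cumulative token counts after each stage, in trillions, as stated above.
# The stage labels are illustrative shorthand, not official names.
STAGES = [
    ("Eagle (RWKV-5) checkpoint", 1.1),
    ("Finch (RWKV-6) on World v2.1", 1.4),
    ("Finch (RWKV-6) on World v3", 3.1),
]


def tokens_added_per_stage(stages):
    """Convert cumulative totals into the tokens added by each stage."""
    added = []
    previous = 0.0
    for label, cumulative in stages:
        # Round to one decimal to avoid floating-point noise like 0.2999...
        added.append((label, round(cumulative - previous, 1)))
        previous = cumulative
    return added


if __name__ == "__main__":
    for label, added in tokens_added_per_stage(STAGES):
        print(f"{label}: +{added}T tokens")
```

Under these figures, the World v3 step accounts for more than half of the total training tokens.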

We added the following datasets (in addition to the original World v2 dataset detailed in the Eagle and Finch research paper) to build the World v3 dataset.

Added in World v2.1

• cosmopedia

• adjustments to slimpajama inclusions

• dolma v1.6 reddit

• Magpie-Align

• glaiveai_glaive-code-assistant-v3

• cognitivecomputations_SystemChat-2.0_SystemChat

• migtissera_Tess_tess-v1.5

• openbmb_UltraInteract_sft

• m-a-p~Code-Feedback~Code-Feedback

Added in World v3

• fineweb-edu

• DCLM

• cosmopedia-v2

• Buzz-V12

• WebInstructSub

• SKGInstruct

• math-ai

• TemplateGSM

• all of starcoder

• python-edu (in HuggingFaceTB/smollm-corpus)

For the upcoming RWKV-7 “Goose” training runs, we will be improving and expanding the tokenizer to efficiently handle more world languages, and adding even more new dataset components.

Try out Finch World 3 today!

Acknowledgments

A big thank you to the following groups, who were instrumental in the continued development of the RWKV architecture and models:

Recursal AI for its commitment to providing resources and development for the RWKV ecosystem - you can use their featherless.ai platform to easily run RWKV and compare it to other language models

EleutherAI for support and guidance, especially on benchmarks and publishing research papers about the RWKV architecture

Linux Foundation AI & Data group for supporting and hosting the RWKV project

And of course, a huge thank you to the many developers around the world working hard to improve the RWKV ecosystem and provide environmentally friendly open-source AI for all.