🐣 RWKV v5 1.5B - Achieves SOTA multi-lingual performance

The best AI model in the smol <2B param weight class has arrived

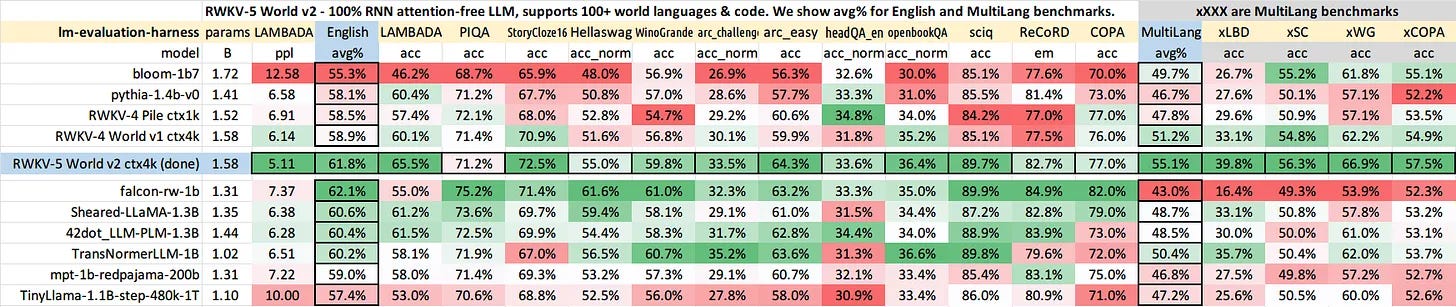

RWKV v5 1.5B achieves SOTA status with:

Industry leading multi-lingual performance (across xLBD, xSC, xWG, xCOPA benchmarks) by significant margins, against all existing models

Comparable performance to falcon-rw-1b on English-language benchmarks

We win out on LAMBADA, StoryCloze16, arc_challenge, arc_easy, headQA_en, openbookQA, sciq, COPA

but lose out very slightly on PIQA, HellaSwag, WinoGrande, ReCoRD, COPA

For nearly all use cases in the under-2B param model class, RWKV v5 is now either the best model (for multi-lingual use) or tied for 1st place with falcon-rw-1b (for English)

This makes it a strong default model of choice within its weight class.

We intend to repeat this pattern in the 3B, 7B, and 14B weight classes. We expect the 3B model to be out by the first week of December.

You can access the model today via the following link:

Model download: https://huggingface.co/BlinkDL/rwkv-5-world/tree/main
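If you would rather script the download than click through the repo page, here is a minimal sketch. It assumes the `huggingface_hub` package is installed and that the checkpoints are stored as `.pth` files. The helper names and the `example.pth` filename are hypothetical placeholders, so list the repo first and pick the real filename from there.

```python
from urllib.parse import quote

# Repo id taken from the download link above.
REPO_ID = "BlinkDL/rwkv-5-world"


def checkpoint_url(filename, revision="main"):
    """Build the direct-download URL for one file in the repo (hypothetical helper)."""
    return f"https://huggingface.co/{REPO_ID}/resolve/{quote(revision)}/{quote(filename)}"


def list_checkpoints():
    """Return the .pth files in the repo (assumes checkpoints use that extension)."""
    from huggingface_hub import list_repo_files  # pip install huggingface_hub

    return [name for name in list_repo_files(REPO_ID) if name.endswith(".pth")]


def download_checkpoint(filename, revision="main"):
    """Download one file into the local Hugging Face cache and return its local path."""
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=REPO_ID, filename=filename, revision=revision)


if __name__ == "__main__":
    # "example.pth" is a placeholder, not a real file in the repo.
    print(checkpoint_url("example.pth"))
```

Calling `list_checkpoints()` and passing one of the returned names to `download_checkpoint()` should fetch the weights. Both of those calls need network access, while `checkpoint_url()` only builds a string.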

This is a repost of a past announcement, made before this blog was set up