🚀 RWKV.cpp - shipping to 1.5 billion systems worldwide

We went from ~50k installations to 1.5 billion. RWKV.cpp is now on every Windows 10 and 11 computer near you (even the ones in the IT store).

Silently, overnight, it's everywhere: in every Windows 10 and 11 PC.

Or more specifically, “Windows 11: version 23H2” and “Windows 10: version 22H2” …

C:\Program Files\Microsoft Office\root\vfs\ProgramFilesCommonX64\Microsoft Shared\OFFICE16

Today, you can literally walk into your local IT store, find a laptop with Windows 11 Copilot, search for rwkv (with system files enabled), and find the files there.
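If you would rather check your own machine than the one in the store, a minimal Python sketch along these lines should do the same search. It assumes Python is installed and that the folder quoted above exists on your system. The function name `find_rwkv_files` is ours, purely for illustration. It only looks for file names containing "rwkv" and makes no assumption about what the files are actually called.

```python
import os
from pathlib import Path

# Folder quoted in this post. Pass a different root if your install differs.
OFFICE_DIR = Path(
    r"C:\Program Files\Microsoft Office\root\vfs"
    r"\ProgramFilesCommonX64\Microsoft Shared\OFFICE16"
)


def find_rwkv_files(root):
    """Return sorted paths under root whose file name contains 'rwkv'.

    The match is case-insensitive. A missing or unreadable root simply
    yields an empty list, because os.walk skips errors by default.
    """
    matches = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if "rwkv" in name.lower():
                matches.append(Path(dirpath) / name)
    return sorted(matches)


if __name__ == "__main__":
    for path in find_rwkv_files(OFFICE_DIR):
        print(path)
```

An empty result only means nothing matched under that folder. It says nothing about other locations on the disk.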

With an estimated half a billion Windows 11 and 1 billion Windows 10 installations, this marks the largest rollout for RWKV in terms of installations 🤯

Is it real?

To validate the binaries, we decompiled them and verified that they are based on the RWKV.cpp project, supporting up to version 5 of our models (we are currently on version 6).

So yes, it is real.

Our project is Apache 2 licensed, so Microsoft is allowed to do this (assuming proper Apache 2 license attribution).

What is Microsoft using it for?

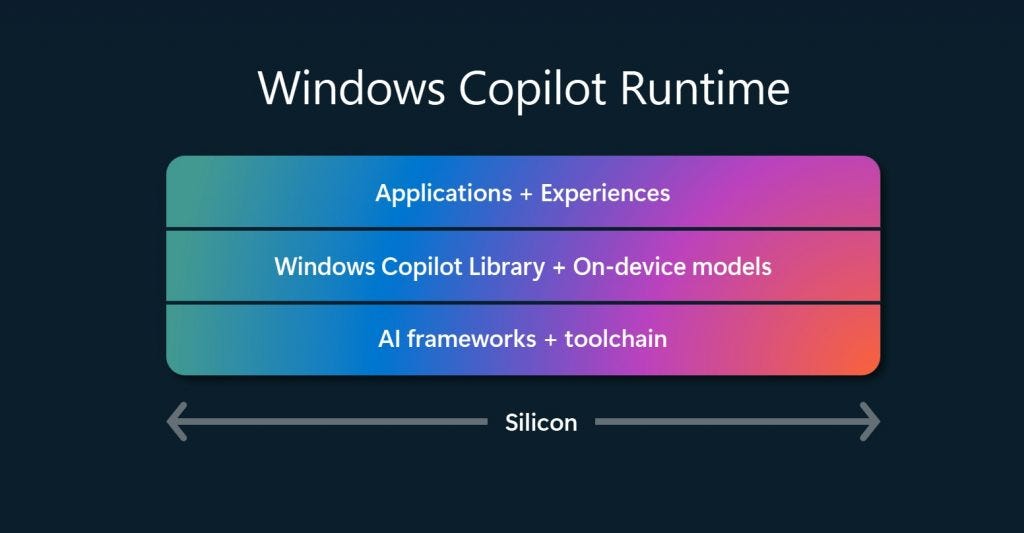

While it’s unclear what specifically Microsoft is using our models for, it is believed that this is in preparation for a local Copilot running on-device models.

RWKV's biggest advantage is its ability to process information like a transformer model at a fraction of the GPU time and energy cost, making it one of the world’s greenest models.

An AI model's energy usage is critical for a laptop’s battery life.

RWKV is probably used in combination with the Microsoft Phi line of models (which handles image processing) to provide:

- low-computation batch processing in the background (MS Recall)

- general-purpose chat (though this is probably the Phi model)

Its main advantages are its low energy cost and language support.

Fingers crossed on the rollout

For now, until the rollout of offline Copilot into the Microsoft operating system and/or Office 365, we will be keeping tabs on how our models are deployed into Windows.

We are excited to see what is next as we scale out the deployment of the RWKV open-source foundation model.

Change note: this article originally cited 0.5 billion, which is the estimated size of the Windows 11 deployment.

It has been updated to include Windows 10, as we have received confirmation for it as well.